# 2024 In-network Machine Learning


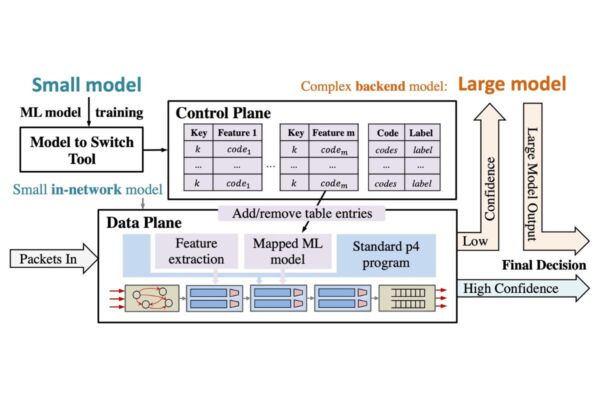

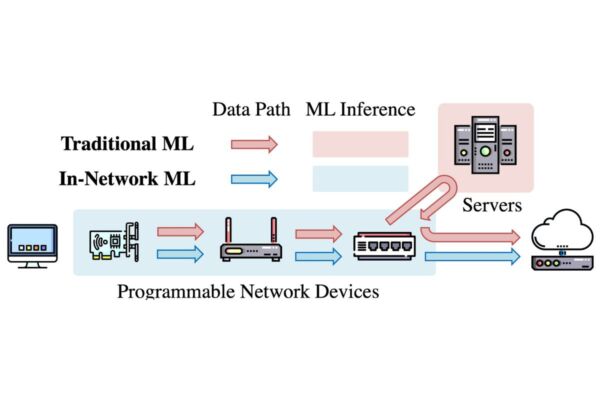

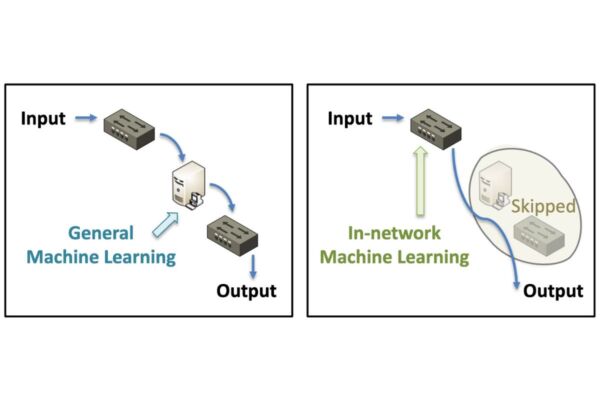

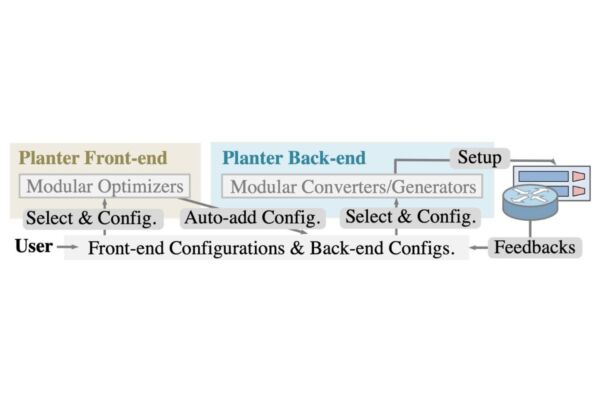

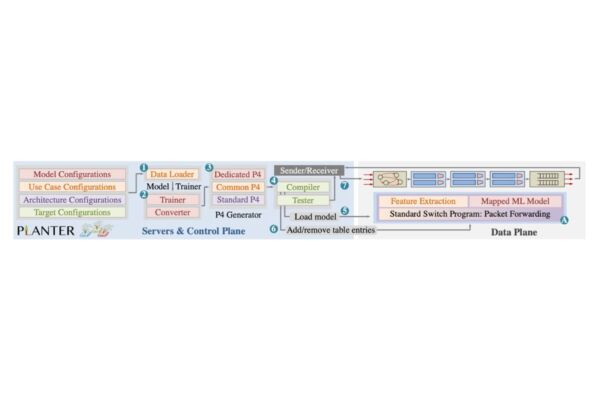

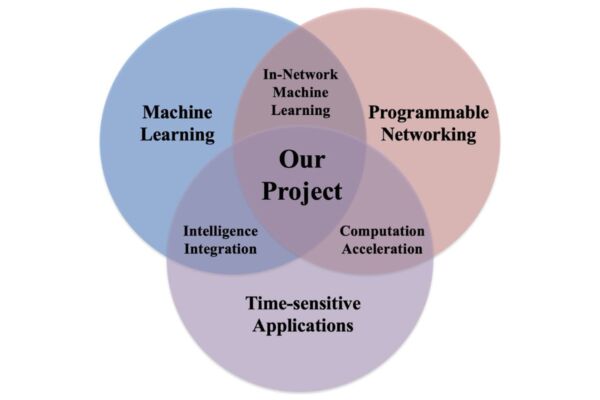

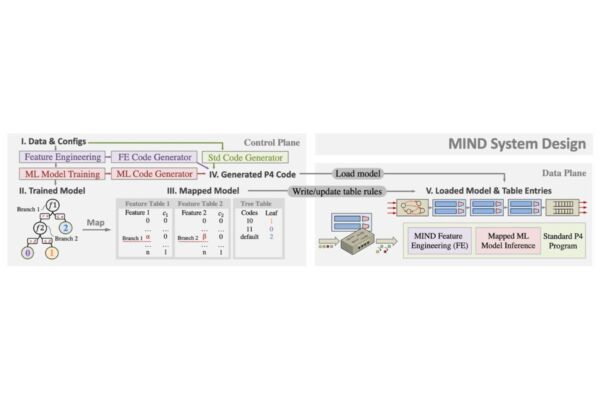

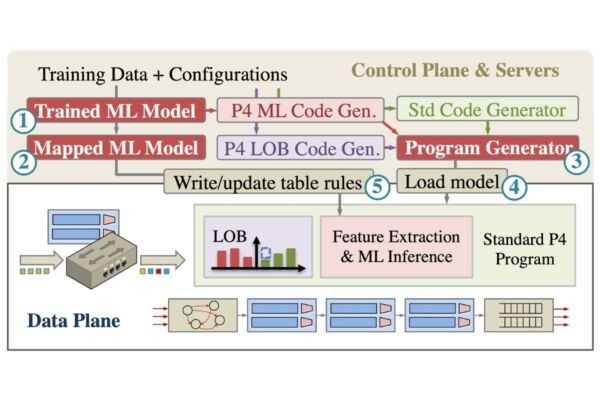

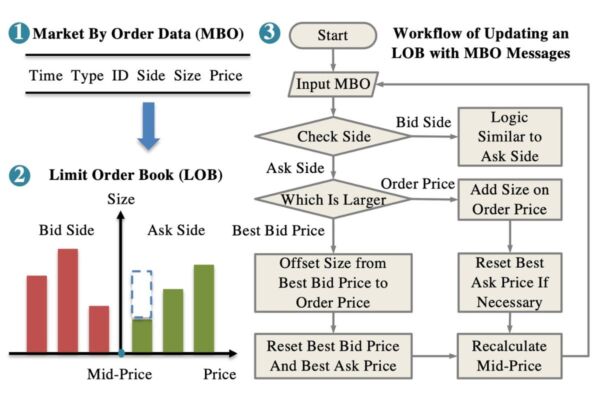

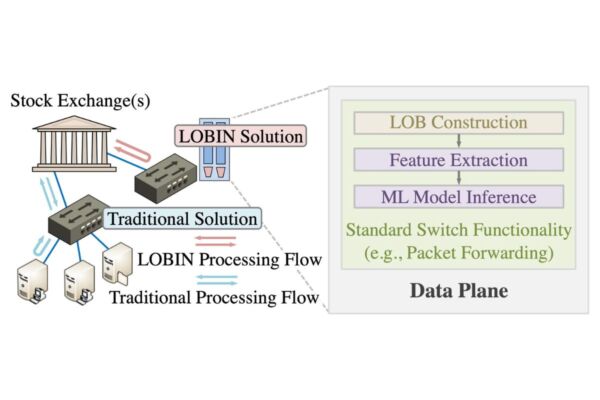

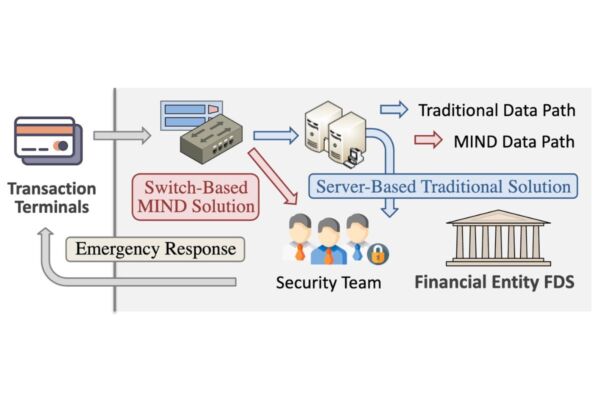

The rise of machine learning (ML) in time-sensitive applications such as autonomous vehicles and financial trading demands ultra-fast response times, and traditional ML frameworks often struggle to meet these latency and performance requirements. This research introduces in-network ML, which offloads ML inference to network devices such as switches and network interface cards (NICs). It achieves up to 800x faster response times and up to 1000x lower power consumption than server-based solutions. Embedding ML directly within the network also reduces data traffic and improves efficiency for real-time AI applications.
